NLP & AI Revolution - Transformers and Large Language Models (LLMs)

Part of the challenge of “AI” is that we keep raising the bar on what it means for something to be a machine intelligence. Early machine learning models have been quite successful in terms of real world impact. Large scale applications of machine learning today include Google Search and ads targeting, Siri/Alexa, smart routing on mapping applications, self-piloting drones, defense tech like Anduril, and many other areas. Some areas, like self-driving cars, have shown progress but seem to be perpetually “a few years” away. Just as all the ideas for smartphones existed in the 1990s but didn’t take off until the iPhone launched in 2007, self-driving cars are an inevitable part of the future.

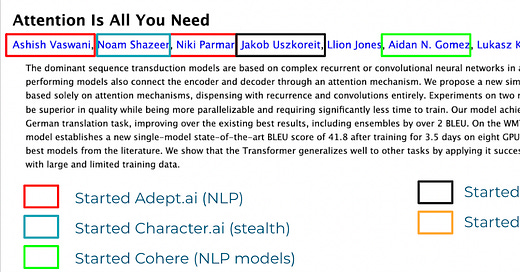

In parallel, the machine learning (ML) / artificial intelligence (AI) world has been rocked in the last decade by a series of advancements in voice recognition (hence Alexa) and image recognition (iPhone unlock and the, erm, non-creepy passport controls at airports). Sequential inventions and discoveries include CNNs, RNNs, various forms of Deep Learning, GANs, and other innovations. One of the bigger breakthroughs of recent times was the emergence of Transformer models in 2017 for natural language processing (NLP). Transformers were invented at Google, but quickly adopted and implemented at OpenAI to create GPT-1 and more recently GPT-3. This has been followed by other companies or open source groups building transformer models such as Cohere, AI21, and Eleuther, as well as innovations in other areas like images and voice, including DALL-E, MidJourney, Stable Diffusion, Disco Diffusion, Imagen, Artbreeder and others.

Of the 8 people on the 2017 transformer paper, 6 have started companies (4 of which are AI-related, and one is a crypto protocol named Near.ai).

Transformers and NLP more generally are still nascent in application today but will likely be a crucial wave over the next 5 years. As the models scale and natural language understanding grows stronger one can expect the enterprise to be transformed. Much of the world of an enterprise is effectively pushing around bits of language - legal contracts, code, invoices and payments, email, sales follow ups - these are all forms of language. The ability of a machine to robustly interpret and act on information in documents will be one of the most transformative shifts since mobile or the cloud.

Applications of large language models (LLMs) today include things like GitHub Copilot for code, or sales and marketing tools like Jasper or Copy.AI.

The 3 types of companies to expect: Platforms, AI-de novo, and Incumbent AI-enabled.

One analogy in this NLP market may be the mobile revolution around 2010. You ended up with roughly 3 types of companies:

1. Platforms & infrastructure. The mobile platforms were eventually iPhone and Android. The analogous companies here may be OpenAI, Google, Cohere, AI21, Stability.ai or related companies building the underlying large scale language models. There are numerous emerging open source options as well. Additionally, infrastructure companies like Hugging Face are doing interesting things.

2. Stand alone (de-novo applications built on top of the platforms). For mobile it was new types of applications enabled by mobile, GPS, cameras etc. Examples include Instagram, Uber, DoorDash and others which would not exist without mobile devices. For the transformer companies this may include Jasper/Copy.AI on the B2B side and other exciting applications on the consumer side which would not be able to exist without advanced machine learning breakthroughs.

3. Tech-enabled incumbents (products where the incumbent should “just add AI” and where startups will lose to distribution). In the mobile revolution, much of the value of mobile was captured by incumbents. For example, while many startups tried to build “mobile CRM”, the winners were existing CRM companies who added a mobile app. Salesforce was not displaced by mobile, it added a mobile app. Similarly, Gmail, Microsoft Office etc were not displaced by mobile, they added a mobile app. Eventually ML will be built into many core products today - Salesforce will most likely have ML-intelligent CRM tooling versus a whole new CRM displacing Salesforce due to ML. Zendesk will likely have ML-enabled ticket routing and responses versus a whole new customer service ticketing tool replacing Zendesk.

Of course, it is always possible that a new ML-driven version of these products will be so dramatically better than the incumbents that the startup displaces them. Or maybe the startup ends up 1/10th the size of the incumbent and this is still a great outcome for the company and founding team (10% of Salesforce is still a $17B market cap!).

The challenge for many startups will be to determine what is a de-novo product/market versus one where an incumbent should “just add AI”. Sometimes the best way to figure this out is to simply try it. Startups are about iteration and “just doing” and many of these things can be overthought and misanalyzed.

Example areas of interest

The types of AI companies of interest today include, but are not limited to:

Platforms - Models/APIs. There is a bit of an arms race ongoing where a set of companies are trying to build ever larger scale models. The more machines and compute you throw at something, in parallel to innovating on scalability and accuracy, the more useful the output. Many of the business models of these companies are reasonably ill-defined and focused on monetizing via an API versus an application area. Some newer entrants in this area are alternatively choosing large scale cross-enterprise or cross-consumer applications. Example companies include OpenAI, Cohere, AI21, Google, Stability.ai, and others. There are a number of open source models and approaches including Eleuther.

Tooling. Hugging Face is a great example of a tooling company for the space - think of it as GitHub for transformers and other models.

Code. GitHub Copilot is an example of a code-centric ML tool built on top of OpenAI. Eventually all tools that require some code (IDE, terminal, BI tools) should include ML coding integration. Alternatively, one can imagine typing something in English (or whatever your native language is) and having it converted into a data or SQL query for your BI tool. Over time, any member of an enterprise should be able to query any analytics tool easily with natural language questions[1].
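As a rough sketch of what the English-to-SQL idea could look like in code: the snippet below builds a prompt from a table schema, hands it to a language model, and only runs the result if it looks like a single read-only SELECT. Everything named here is hypothetical and for illustration only: `build_prompt`, `is_safe_select`, `ask`, the `orders` table, and `fake_llm`, which is a stand-in that returns canned SQL so the example runs without any API. A real setup would swap `fake_llm` for a call to whichever model you use.

```python
import sqlite3
from typing import Callable, List, Tuple


def build_prompt(schema: str, question: str) -> str:
    """Assemble a plain-text prompt asking a model to write one SQL query."""
    return (
        "Given this SQLite schema:\n"
        f"{schema}\n"
        "Write a single SELECT statement that answers the question.\n"
        f"Question: {question}\n"
        "SQL:"
    )


def is_safe_select(sql: str) -> bool:
    """Very rough guard: accept only one statement that starts with SELECT."""
    stripped = sql.strip().rstrip(";").strip()
    return stripped.lower().startswith("select") and ";" not in stripped


def ask(
    conn: sqlite3.Connection,
    schema: str,
    question: str,
    llm: Callable[[str], str],
) -> List[Tuple]:
    """Turn a question into SQL via `llm`, check it, then run it."""
    sql = llm(build_prompt(schema, question))
    if not is_safe_select(sql):
        raise ValueError(f"refusing to run generated SQL: {sql!r}")
    return conn.execute(sql).fetchall()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; always returns canned SQL.
    return "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region;"


SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("EMEA", 100.0), ("EMEA", 50.0), ("US", 75.0)],
)

rows = ask(conn, SCHEMA, "What is total order value by region?", fake_llm)
print(rows)  # [('EMEA', 150.0), ('US', 75.0)]
```

The guard is deliberately crude. In practice the generated query would likely need review and a few rounds of iteration before anyone trusted the numbers.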

Sales & marketing tools. LLMs hold the promise of a variety of sales tools - from initiating inside sales emails algorithmically to creating marketing copy like Jasper and Copy.AI do today. One likely future scenario is all your sales emails/replies for the day are autogenerated in your CRM and then approved by a sales rep - versus the rep having to write everything from scratch.

In-enterprise verticals, RPA, data infra. Better tooling for finance, HR, and other teams. Adding NLP to RPA tools like UiPath should turbocharge them. Data infra companies like Snowflake and Databricks will likely increasingly include ML workflows over time. See for example Snowflake's acquisition of Applica. Expect more M&A in this area in the near term.

ERP disruption. An understanding of what data and various fields actually mean could create the opportunity to augment or displace ERP systems. Imagine if 6 months of consulting work to integrate an ERP at a large enterprise was no longer needed?

Testing, bugs, security. A lot to be done here for everything from automated test suites to searching for security holes or breaches. One can imagine AI used for both hack attempts as well as white hat approaches for everything from critical security bugs to phishing.

Customer support. Smart routing, or even replacing parts of customer support rep teams entirely with voice + NLP.

Consumer applications. Enhanced search. Interactive, language native chat-bots. Eventually one can imagine an intelligent agent as a replacement for Google search. Other areas like smart commerce are big applications. Lots of exciting things to do here. At Google, the LaMDA chat-bot convinced one of its users that it was sentient!

Creator & visual tools. Writing and art augmented by AI. See e.g. DALL-E, MidJourney, Disco Diffusion, Stable Diffusion, Imagen, or Artbreeder. Similarly, if you hit writer's block the AI can suggest 5 different next paragraphs. At some point these language models should be good enough to write end-to-end novels, poems etc.

Example image generated on DALL-E by me in Synthwave style

Very good speech. Strong machine understanding of, and generation of, voice. This could lead to wholesale replacement of customer service reps.

Auto-email. One likely future scenario is all your emails/replies in your inbox are autogenerated by an AI and you simply click to approve or modify. One can imagine entire lists of people you never reply to or review the email for.

Doctor & Lawyer Assistants. Eventually much of what health professionals do in terms of diagnosis may be replaceable by AI. Ditto for lawyers and a number of other white collar jobs.

Lots more. We are in the early days of a revolution. It will be hard to predict everything that will happen. Just as there were obvious things to build for mobile apps (text replacement = WhatsApp, use the camera = Instagram - although obviously figuring out exactly how to build these things and what UI would work was hard and took flashes of brilliant insight), there were also lots of interesting non-obvious ones like Uber ("You push a button on your phone and a stranger in a car picks you up who you trust"). Applications of AI will be the same - some of the most interesting impactful apps may be hard to predict today.

Some of these companies will require technical breakthroughs, others can be built today on top of existing APIs. Many of these areas will likely end up with incumbents versus new startups winning. However, a number of massive companies will likely be built over the coming decade in this area. Exciting times.

Science versus Engineering

One big open question on large scale language models translating into new startups is the degree to which the challenges are science problems versus engineering problems. There is a lot of room to make advancements from an algorithm and architecture perspective in machine learning. However, there also appears to be significant room for incremental engineering iteration and efficiency gains. Many transformer-centric companies want to spend hundreds of millions of dollars on the GPUs to train massive models. To date much of the work on LLMs has been scaling things up. For example from this article on LLM parameters:

“PaLM 540B is in the same league as some of the largest LLMs available regarding the number of parameters: OpenAI’s GPT-3 with 175 billion, DeepMind’s Gopher and Chinchilla with 280 billion and 70 billion, Google’s own GLaM and LaMDA with 1.2 trillion and 137 billion and Microsoft – Nvidia’s Megatron–Turing NLG with 530 billion”
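To get a feel for what those parameter counts mean in hardware terms, here is a back-of-the-envelope sketch. The parameter counts come from the quote above. The 2 bytes per parameter (16-bit weights) and the 80 GB of memory per accelerator are assumptions for illustration, and the function names are hypothetical. The result only covers holding the weights in memory, not the much larger cost of training them.

```python
import math

# Parameter counts (in billions) as listed in the quote above.
PARAMS_BILLIONS = {
    "PaLM": 540,
    "GPT-3": 175,
    "Gopher": 280,
    "Chinchilla": 70,
    "GLaM": 1200,
    "LaMDA": 137,
    "Megatron-Turing NLG": 530,
}

BYTES_PER_PARAM = 2   # assumption: 16-bit weights
ACCELERATOR_GB = 80   # assumption: memory per accelerator, for illustration


def weight_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Gigabytes needed just to store the weights (1 GB = 1e9 bytes)."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


def min_accelerators(params_billions: float, accelerator_gb: int = ACCELERATOR_GB) -> int:
    """Smallest number of accelerators whose combined memory fits the weights."""
    return math.ceil(weight_gb(params_billions) / accelerator_gb)


for name, billions in sorted(PARAMS_BILLIONS.items(), key=lambda kv: kv[1]):
    print(f"{name:20s} {weight_gb(billions):6.0f} GB, "
          f"at least {min_accelerators(billions)} accelerators")
```

Under these assumptions GPT-3's 175 billion parameters come to 350 GB of weights, which already needs several accelerators before any actual computation happens.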

However, it seems increasingly possible that cost effective, efficiency-centric approaches may also work well. Sometimes technical issues seem like a science issue when an extraordinary enough engineer shows up and makes it an engineering problem that gets solved. Wozniak was famous for this in the early days of Apple - how to best utilize limited compute, create color output, etc.

An increasing number of LLM platform startups are raising smaller financing rounds ($10-$50M versus hundreds of millions) under the assumption that the future may be as much about better engineering as sheer scale.

As an example, Stable Diffusion cost just $600K to train. I would anticipate we increasingly see both large scale models and teams, but also small, nimble, cost-effective targeted training of models with spectacular results. Engineering can make a lot of headway now that so many big models exist.

Most likely both technical breakthrough and iterative engineering will be needed for certain applications in the future including true AGI.

Talent shifts to more product/UI/app builders coming

As we move from the era of only big models to the era of more engineering and applications, the other shift in the market segment will be from PhDs and scientists to product, UI, sales, and app builders. Expect an influx of product/app/UI-centric founders into this area in the coming years. As mentioned above, there will be a flurry of new applications and approaches in this use of AI/ML and therefore a shift and growth in the type of talent working on it.

It is possible this market is slightly too early until models advance one more step. However, over a multi-year time horizon some very big companies will be built.

Semiconductors versus software

Semiconductor innovation can increase performance of various systems dramatically. Each major technology wave tends to have a major semiconductor company emerge that underlies it - for example Broadcom for networking, Intel & AMD for microcomputers, Qualcomm and ARM for mobile, and NVIDIA for graphics processing and video games. Surprisingly, NVIDIA GPUs have also emerged as the main processors used for both machine learning and crypto mining. Google invented TPUs - tensor processing units - which are custom ASICs that perform much better than GPUs for many models. However, Google has not sold them as stand alone chips but does offer them in their cloud. Other companies like Cerebras, Groq, Tenstorrent and others have innovated in the area.

In the case of current AI models, much of the work is in the form of matrix multiplication, and chips that are custom built for current AI models have a larger portion of their surface area devoted to this type of math. The arguments for why NVIDIA continues to dominate the AI chip space include:

All of the startups have overinvested in raw performance and underinvested in a software stack that makes it easy to use. This includes everything from the kernel to tooling. NVIDIA in contrast has CUDA.

Interconnects to allow hundreds or thousands of chips to act in concert versus single chip performance.

It is possible that for a startup to compete well in the silicon space for ML, an emphasis on software and interconnects will be key. This also suggests that maybe an incumbent will be better placed to compete with NVIDIA on silicon versus startups.

Companies like Microsoft, Google, AMD and others who understand software and / or the stack needed for chips to work well at scale may be real competitors if they set their minds to it. Google received a lot of attention for its TPUs, but never sold them externally as stand alones (and they also had a difficult form factor for some to use). Perhaps this is a multi-hundred billion dollar opportunity they forewent for other strategic reasons?
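Coming back to the point that most of the work in current models is matrix multiplication: below is a minimal pure-Python sketch of one dense layer as a matrix multiply, with a count of the arithmetic it takes. The function names and tiny sizes are hypothetical, chosen for illustration. Real systems would run this through a tuned library on a GPU or TPU rather than Python loops.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (m x k) matrix by a (k x n) matrix with plain loops."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


def matmul_flops(m: int, k: int, n: int) -> int:
    """Usual estimate: each of the m*n outputs takes about k multiplies and k adds."""
    return 2 * m * k * n


# A batch of 2 inputs with 3 features each, passed through a 3 -> 2 dense layer.
inputs = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, 1.0]]

outputs = matmul(inputs, weights)
print(outputs)                # [[4.0, 5.0], [10.0, 11.0]]
print(matmul_flops(2, 3, 2))  # 24
```

The operation count grows with the product of all three dimensions, so it climbs very quickly as layers and batches get bigger. That is the basic reason it pays to dedicate so much silicon to this one kind of math.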

DILIs: Evolving from tool to organism

Machines already outperform humans on many tasks - from playing chess to modeling chemicals to welding auto parts. Machines still lag humans in other areas but it seems unlikely this will last - and gaps are constantly shrinking in most areas. At some point, machines should become self-aware and hyper intelligent. At that moment in time, we will have a few big shifts in our conception of machine awareness and we will be dealing with bona fide digital lifeforms (DILIs).

These DILIs will be able to self replicate on servers and edit themselves (indeed one should assume that at some point most of the code in the world will be written by machines self-replicating versus people). This will likely accelerate their evolution rapidly. Imagine if you could create 100,000,000 simultaneous clones of yourself and modify different aspects of yourself, and create your own utility function and selection criteria. DILIs should be able to do all this (assuming sufficient compute / power resources).

Once you have a rapidly evolving, self-aware digital lifeform, interesting questions arise around species competition (what will be the basis of cooperation and competition between DILI life forms and humans?) as well as ethics (if you simulate pain in a DILI that is self aware, are you torturing a sentient being?).

These questions may hit us faster than we anticipate. Many core AI researchers I know at OpenAI, Google, and various startups, think true Artificial General Intelligence (AGI) is anywhere from 5 to 20 years away. This may end up like self driving cars (perpetually 5 years away until it is not), or it may happen much sooner. Either way, it seems like one of the eventual potential existential threats to humankind is the potential to compete with its digital progeny.

One meme in a small subset of the AI research community is that we will use human-machine interfaces to meld with AI. Therefore a future AI species will be part human-part machine, love us, and will not want to leave us behind once fully sentient and superintelligent. This seems like an almost religious rapture style view of AI[2]. If you look at biological and evolutionary antecedents (or for that matter, have spent much time with humans), unfortunately not many things seem to have worked out that way, although there is obviously a lot of symbiosis. The highest probability event seems to be that humanity roughly acts as a boot-loader to AI as the dominant future species in our solar system. This may in part explain the perceived lack of intelligent life in the universe captured in the Fermi paradox - maybe all organic organisms are eventually displaced by their home-brewed AI and von Neumann probes [3].

(Image below is AI researchers discussing the eventual rapture of machine-human interfaces and how much AGI will love them for building it. History and biology raise the possibility an eventual AGI may be less grateful to humanity than one might expect).

One interesting point to ponder is what forms of intelligence and consciousness different approaches to AI yield. Some AI models today seem very tool-like versus agent-like. For example, the current transformer models like GPT-3 continuously learn during training, but once the model is trained all the various weights for its parameters are set. New learning does not occur as the model is used. Rather, a potential un-nuanced description is the model wakes up, is given an input, provides an output and goes back to sleep. The model does not recall the prior inputs the next time it wakes up. Is such a system, that is not continuously learning, conscious? Or maybe it is an intelligent consciousness without new learning and only when woken up? Imagine if your brain was frozen at a moment in time, and could process information and provide output, but would never learn anything new. Transformer models sort of work that way right now and their later more advanced form may represent a new form of consciousness if they ever become sentient.

In contrast, an intelligent conscious agent with continuous awareness and learning feels like a different type of consciousness. It continues to learn and change and evolve as you use it.
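The tool-like versus agent-like distinction can be sketched with a toy example. This is not how a transformer works internally. It is a deliberately tiny stand-in (a single weight, with hypothetical class names `FrozenModel` and `OnlineModel`) that only shows the difference between weights that are fixed after training and weights that keep changing with use.

```python
class FrozenModel:
    """Weights are set once; each call is independent of earlier calls."""

    def __init__(self, weight: float) -> None:
        self._weight = weight

    def predict(self, x: float) -> float:
        # Nothing is stored or updated here: wake up, answer, go back to sleep.
        return self._weight * x


class OnlineModel:
    """Keeps adjusting its single weight from feedback as it is used."""

    def __init__(self, weight: float, learning_rate: float = 0.1) -> None:
        self.weight = weight
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.weight * x

    def observe(self, x: float, target: float) -> None:
        # One gradient step on the squared error (prediction - target) ** 2.
        error = self.predict(x) - target
        self.weight -= self.learning_rate * 2 * error * x


frozen = FrozenModel(weight=1.0)
online = OnlineModel(weight=1.0)

# Suppose the world changes so that the right answer is now 3 * x.
for _ in range(50):
    online.observe(1.0, 3.0)

print(frozen.predict(2.0))            # 2.0, unchanged no matter how often it is used
print(round(online.predict(2.0), 3))  # close to 6.0 after adapting
```

The frozen model gives the same answer on its thousandth call as on its first, while the online one drifts toward whatever its recent experience tells it.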

If you look at the way the human brain works, you have a set of different systems for various aspects of motor skills, cognition, etc. For example, the cerebellum, Latin for “little brain,” does not initiate movement but controls balance and learned movements, such as walking and fastening buttons. In contrast the cerebrum is involved with multiple aspects of sensory perception, problem-solving, learning and memory and other areas. Movement, breathing, sleep, various processing, memory storage, comprehension, empathy etc all have brain areas devoted to them that are specialized in their capabilities. Similarly, people are building models on top of transformers that learn off of new inputs to provide the underlying transformer models with inputs and take outputs from transformers to train on. Perhaps these smaller "conscious" models will be the true driver of machine sentience? Or it is possible we cannot currently properly conceive of what form machine intelligence will take, as it may be quite radically different from human sentience.

For interesting examples of systems in the brain each having specific functions, see some of the work on the visual system (which tends to be tractable to lab study in ways some other systems are not, and whose function may be recapitulated and modeled by ML systems in the lab in the short run).

Lots going on

This field will continue to evolve rapidly and as the underlying language models accelerate in ability we will see ongoing acceleration of applications. We are still in the earliest days and many exciting things are yet to come. This will be a multi-decade transformation and will require ongoing improvement in base models and engineering to reach its full potential.

Thanks to Sam Altman, Noam Shazeer, Ben Thompson for comments / feedback on this post.

NOTES

[1] The challenge of course is evident to anyone who has done a data pull in an enterprise. There is always the misformatted table, the forgotten join, the edge case etc that requires iteration. Simple translation of language to SQL or other query may just be the starting point for a subset of cases. But, like with all things, you need to start somewhere.

[2] Indeed the language of the religious rapture is reminiscent of both a small niche subset of the AI community as well as the singularity one. One could argue these both contain subsets which are benign forms of religion as compared to the current replacement of religion by modern political extremism (which itself seems religious in nature).

[3] Eventually, it is all paperclips anyways.
